Ethical vs. Unethical AI In Music

Dec 23, 2024

Michael "MJ" Jacob

CEO & co-Founder

Imagine it’s 2030. AI music technology has advanced to the point where it creates breathtaking music and art without any human involvement. The creative credit and compensation all go to “AI technology,” leaving artists sidelined. Creative incomes have disappeared, and we’re left wondering: how did we let this happen? This is why Ethical AI matters.

How we got here

First of all, AI is not new. AI has been around since the 1950s.

The world of 'Generative' AI is where all the buzz is, yet the fundamentals of this technology were hardly new as of 2024. The Transformer architecture (the ‘T’ in ChatGPT) was introduced and open-sourced by Google researchers in 2017.

Fast forward to 2024, and generative AI (a branch of machine learning) has gotten so good that we use it in everyday life, from tools like ChatGPT and Midjourney to large companies embedding AI into their existing products: Adobe, Spotify (playlist creator), and the list goes on.

However, companies are also becoming cautious (for good reason) about how they position their use of AI, and the root of that caution is a buzzword floating around: “Ethical AI”.

In this article, you will learn all about the differences between Ethical and Unethical AI in the music industry landscape, as well as how this will shape the future of AI in music production.

Who am I?

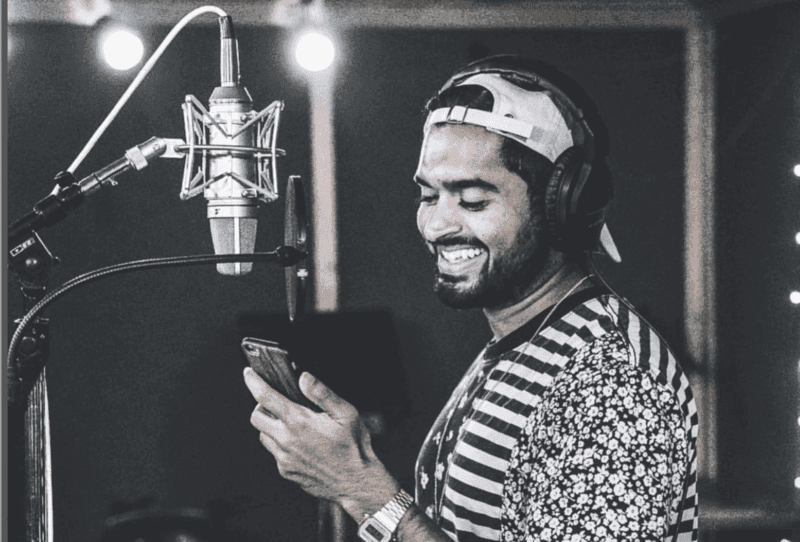

First and foremost, my name is MJ and I'm the CEO and Co-Founder of Lemonaide. I'm a musician and builder in the AI technology space.

On the creative end, I've performed and headlined live shows, and accumulated millions of streams. Thank God for music—it's saved my life in countless ways, especially given the challenges of a tough childhood.

My Journey with AI Technology

If I look back at the past 8-10 years, I can summarize my journey in AI in three phases:

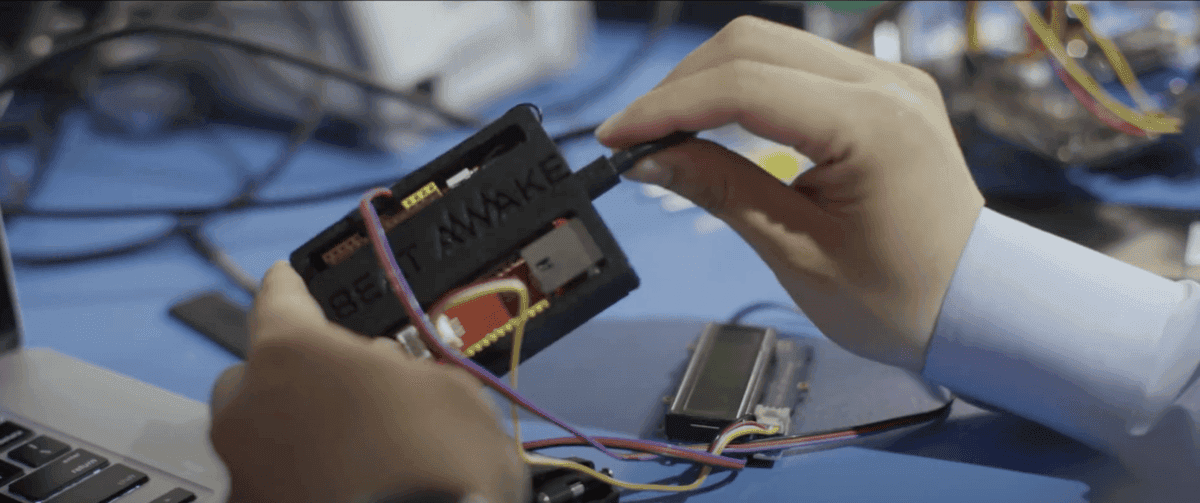

Staying Awake At The Wheel: My first AI Prototype

My journey with AI began in 2015, during the first wave of its growing influence. It was then that I built my first prototype: a system designed to prevent drivers from falling asleep behind the wheel.

This early project not only fuelled my passion for AI but also taught me how transformative technology can be when applied to solve real-life problems.

Here's a quick video explaining the concept in more detail, if you're interested.

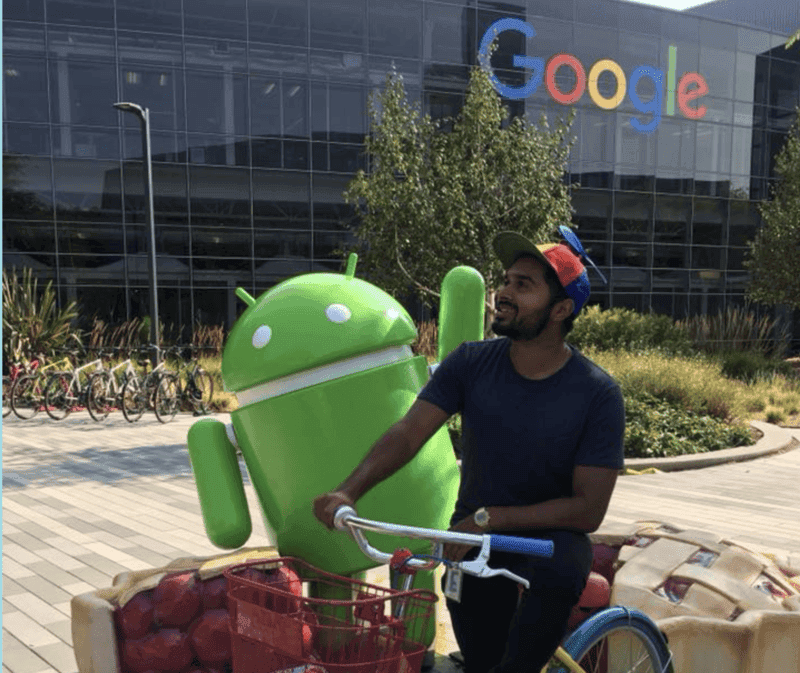

Working at Google

The second wave of my AI journey began with an incredible opportunity: a job offer from Google, which came on the back of the first wave and the success of that initial prototype.

This early project not only opened doors but also set the stage for my next chapter.

At Google, I spent five transformative years immersed in the world of AI, learning how it seamlessly integrates into the Google Cloud Platform.

From building scalable solutions to mastering the intricacies of machine learning and data processing, I developed a deeper understanding of how AI can be used to solve real-world challenges at scale.

Collaborating with some of the brightest minds in the industry, I explored how cloud-based AI empowers businesses and developers worldwide, cementing my passion for innovation and impact.

The birth of Lemonaide: Merging my passion for Technology & Music

In the third wave of my AI journey, I set out to merge two worlds I was deeply passionate about: artificial intelligence and music. I wanted to explore how these seemingly distinct fields could come together to enhance creativity and transform the artistic process.

This vision led to the creation of Lemonaide, which brings this fusion to life.

The Three Pillars of Music AI: Training, Modeling, and Generating

As I learned more about AI (which, in practice, mostly means machine learning), I realized that it all comes down to these three steps:

Training Data: This is the data (text, image, video, etc.) that you want an AI model to learn from

The Model: This is the actual “machine learning model” that you would select or build, which has the best chance of learning from your Training Data (see example)

The Inference (aka Generation): Once your ‘Model’ is finished learning from your Training Data, you ask it for ‘predictions’. This is typically the interaction point an end-user has with AI technology.
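The three steps above can be sketched in a few lines of Python. This is a toy illustration only: the melodies are made-up MIDI note numbers, and the "model" is a simple Markov chain (it just remembers which note tends to follow which), not the kind of neural network used in real music AI.

```python
import random
from collections import defaultdict

# Step 1: Training Data. Toy melodies as MIDI note numbers (made up for illustration).
training_melodies = [
    [60, 62, 64, 65, 67],
    [60, 64, 67, 64, 60],
    [62, 64, 65, 67, 69],
]


# Step 2: The Model. A first-order Markov chain that records which note follows which.
def train(melodies):
    transitions = defaultdict(list)
    for melody in melodies:
        for current, following in zip(melody, melody[1:]):
            transitions[current].append(following)
    return dict(transitions)


# Step 3: Inference (generation). Ask the trained model for a new sequence.
def generate(model, start_note, length, seed=0):
    rng = random.Random(seed)  # fixed seed so the result is repeatable
    notes = [start_note]
    while len(notes) < length:
        options = model.get(notes[-1])
        if not options:  # the model never saw anything follow this note
            break
        notes.append(rng.choice(options))
    return notes


model = train(training_melodies)
melody = generate(model, start_note=60, length=8)
print(melody)
```

Notice that every note the model can ever produce comes straight from the training melodies. Change the training data and you change what the model is able to generate.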

So back in 2020, when I dabbled with AI and music for the first time, I realized just how big a difference the Training Data makes, especially in how powerful the AI model can become in a musical context.

I’ll dive into the technical details in a moment, but first, it’s important to understand one thing: I’m a musician first and a technologist second—as you’ve probably gathered by now.

This perspective has been invaluable throughout my career, shaping the way I approach problems and innovate. Even today, this unique combination remains one of our biggest advantages.

As you continue reading, you’ll notice that my vision of Ethical AI is deeply influenced by this blend of artistry and technology.

If you’re an AI scientist or a non-musician, some of my approaches may seem unconventional—though for others, they may make perfect sense. It’s this fusion of perspectives that drives my belief in using AI as a force for good in the creative world.

Alright, moving on! 🙂

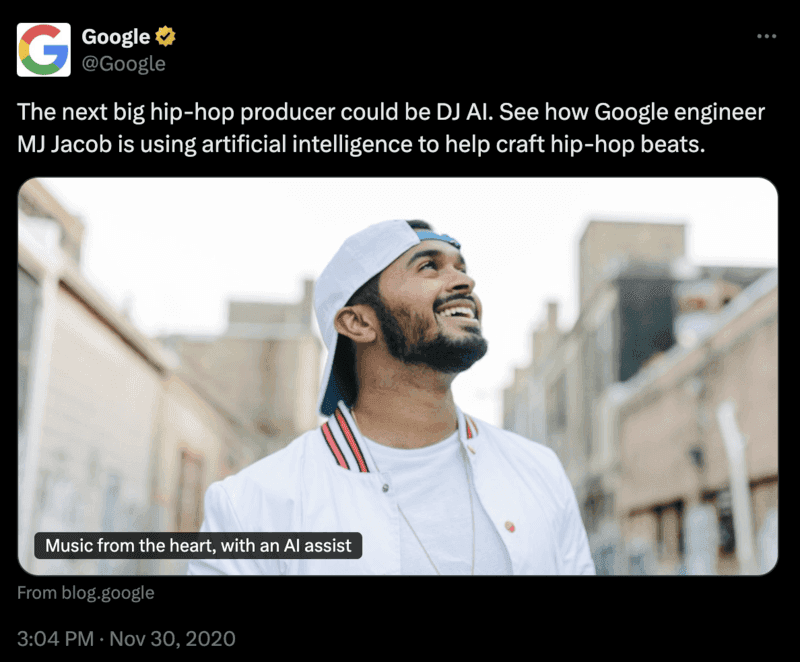

Experimenting with AI music models back in 2020

In 2020, I trained my first AI Music Model. It was capable of generating Melody MIDI and Drum MIDI that I could easily drag into my DAW as a ‘starting point’ (which was especially helpful for someone like me who does not know music theory).

So, as a musician first and technologist second, I immediately had a ‘hunch’ that in order to build a Machine Learning model, I would need other artists’ consent to use their work as ‘Training Data’.

To be clear, at the time there was very little discussion of the buzzword ‘ethical AI’. But as a human and a musician who understands what it takes to create music, I naturally understood the ethical dilemma. It wasn’t that hard; it was intuitive.

So I trained my first algorithm (an AI MIDI Generator) on data from a friend who gave me permission, and quickly realized just how powerful an algorithm can get if you feed it quality music.

It was capable of generating MIDI Patterns, Drum Patterns, and more.

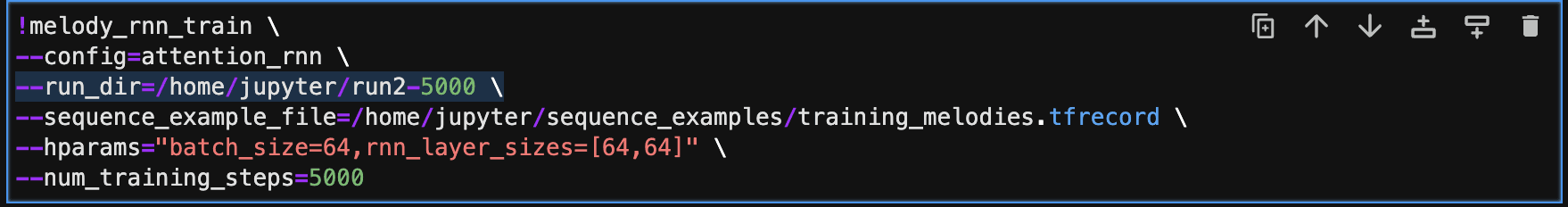

Within that algorithm I trained, there was one line of code that was the make-or-break of the ethical dilemma.

For our non-techies, this line of code simply points to the folder that holds the dataset the AI model will learn from.

Think of it like baking a cake. This line of code is like choosing the ingredients from your pantry: the AI model (like the baker) uses these ingredients (the dataset) to create the final cake.

If the ingredients are stale, unhealthy, or unbalanced, the cake will turn out just as flawed.

Similarly, the quality, diversity, and ethics of the dataset directly determine the AI’s output. This single line of code, pointing to the folder holding the dataset, has profound implications for the model's behavior and the ethical challenges it might pose.
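To make the idea concrete, here's a minimal sketch in Python. It is not the actual code from my model: the folder, the file names, and the consent list are all made up for illustration. It shows how a single path decides what the model learns from, and how a simple consent check could sit in front of it.

```python
from pathlib import Path
import tempfile


def collect_training_files(dataset_dir, consented_names):
    """Return only the MIDI files whose names appear in the consent list."""
    all_files = sorted(Path(dataset_dir).glob("*.mid"))
    return [f for f in all_files if f.name in consented_names]


# Build a throwaway folder with empty placeholder files so the example runs anywhere.
with tempfile.TemporaryDirectory() as tmp:
    for name in ["friend_melody.mid", "friend_drums.mid", "scraped_hit.mid"]:
        (Path(tmp) / name).touch()

    # The "one line" in question: which folder does the model learn from?
    dataset_dir = tmp  # hypothetical; in a real project this would be a fixed path

    # Hypothetical consent list: files the creator explicitly agreed to share.
    consented = {"friend_melody.mid", "friend_drums.mid"}

    training_files = collect_training_files(dataset_dir, consented)
    selected_names = [f.name for f in training_files]

print(selected_names)
```

Without the consent check, everything in the folder would flow into training, including the file nobody agreed to share. Nothing in the code itself stops that from happening, which is the whole point.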

To be honest, it’s a little scary how much power this technology puts in the hands of developers and AI scientists, without any true safety mechanism to verify how a model was trained.

This is exactly how I realized I needed consent from my friend who gave me access to the data. All for the sake of keeping things ethical.

Example of unethical use of Music AI

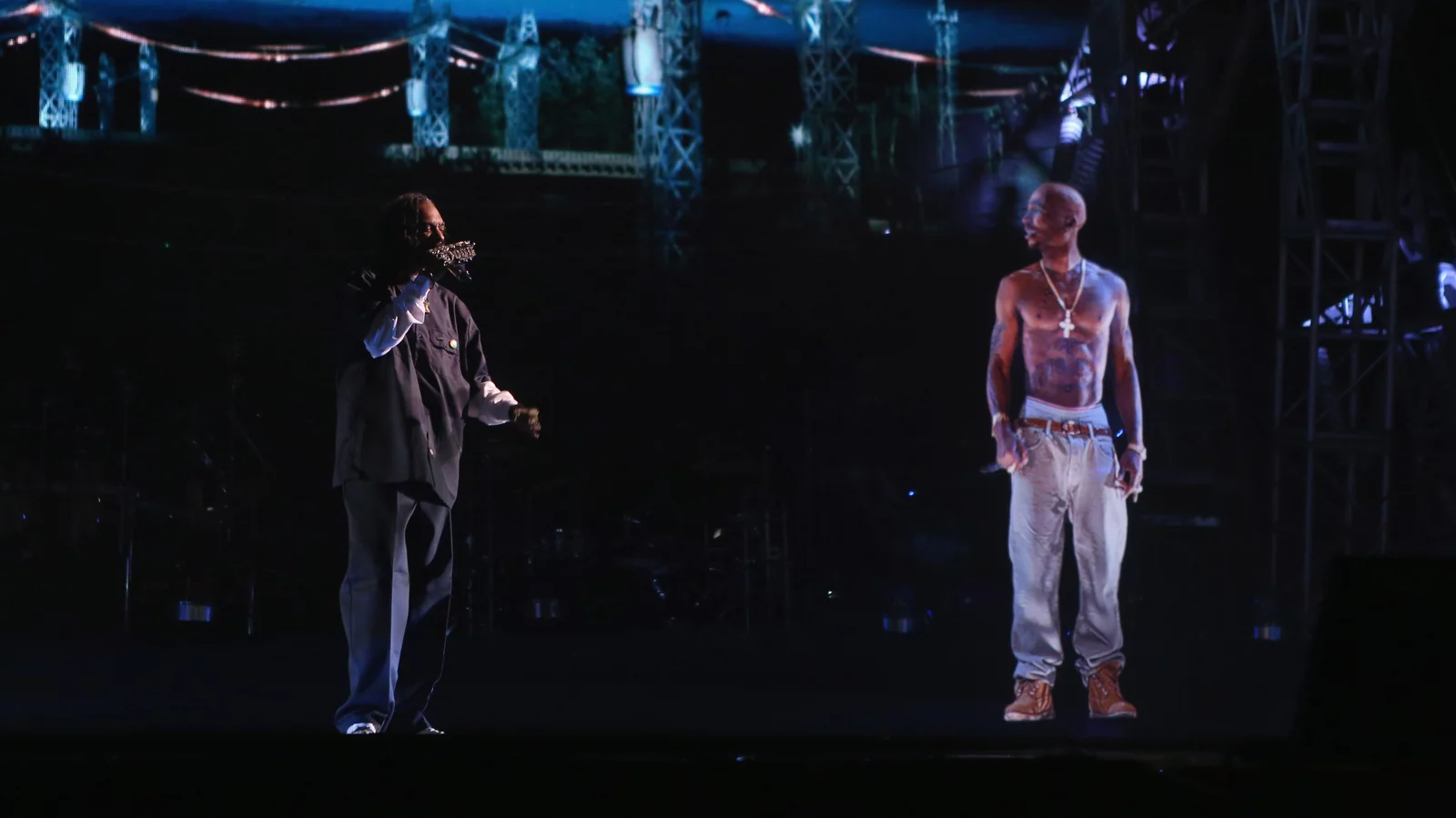

Let me give you a quick example. Have you ever seen that clip on your feed featuring Drake using Tupac’s voice to generate a diss track aimed at Kendrick? Here’s the story if you haven’t.

This is the very definition of unethical AI.

Think about it: do you really believe Tupac would be okay with this? It’s not just an infringement on intellectual property; it’s a profound violation of an artist’s identity and creative essence.

It strips away the authenticity of their work, reducing it to something they never consented to or created, and that’s a deeply personal breach.

Through building my very first AI music model, this understanding became my main takeaway and set the vision for the future. This concept is not just an isolated concern; it’s the foundation upon which the remainder of this article is built.

Understanding the ethical implications of AI in creative fields is crucial if we are to ensure that technology empowers rather than exploits artistry.

This is the real definition of Unethical AI.

If you want to learn more about my process of building this model, I published an article about it through Google Magenta here. You can find all the details of what I built and how it works over there.

Defining Ethical AI in Music: Permission, Fairness, and Value

Now that we know what unethical AI looks like, let’s talk about what is considered ethical.

Fast forward to today, 2024, and there are countless companies in the AI Music space.

There’s the clearly unethical Silicon Valley approach of Suno & Udio

There’s the ethical standard defined by Fairly Trained

And there’s a vast array of companies in between

The truth is, it’s still a grey area. Some companies take advantage of this—whether to jump on the AI hype train or because there aren’t enough clear rules or guidelines in place yet.

Permission

Here's how Ed Newton-Rex, the CEO of Fairly Trained, defines Ethical AI:

There is a divide emerging between two types of generative AI companies: those who get the consent of training data providers, and those who don’t, claiming they have no legal obligation to do so. (reference)

I generally agree with this statement. However, I believe it goes beyond simply obtaining permission from data providers.

Fairness

In the context of music AI, it’s about respecting creators who have spent years perfecting their craft. They deserve to be treated fairly, not just because regulations may require it, but because it’s the right thing to do.

This perspective, of course, comes from my creative, musical side and a deep passion for the art of music itself. It’s this passion that drives me to believe there’s something even more fundamentally important at play here—something that goes beyond permissions and regulations.

Value

Here's my definition of ethical AI:

Ethical AI starts with the Training Dataset, ensuring that the catalogue of Art you are training on has the proper permissions and consent from the original creator(s). (Permission)

It’s also crucial that those who provide their data are compensated fairly for their contribution to any AI model being created. (Fairness)

But we have to take it a step further. It’s not just about getting permission; it’s about figuring out how to not ‘devalue’ music at the same time. (Value)

Will AI replace musicians, producers, and artists in the future?

Let’s address the elephant in the room… The one thing keeping music creatives up at night as AI music technology continues to evolve at breakneck speed.

"Will AI replace musicians, producers, and artists in the future?"

Let’s start off by imagining a world where AI ‘devalues’ music, so this can click a little more.

Ok, hypothetical scenario…

An AI company named ‘Bruno AI’ asks a bunch of musicians for consent to use their music in a ‘cool new AI tool’. Let's imagine you're one of the artists who agrees (without deeply understanding the repercussions of this agreement).

Bruno AI now has access to this data, without restrictions, and can build AI models that are capable of generating full songs / genres / music.

Bruno AI is now able to make music in a style very similar to yours, to the point where a future consumer may decide it’s easier to listen to Bruno AI instead of your music, because it can generate in your style and its subscription is cheaper than buying music directly from you.

This is where the ‘Devalue’ comes in.

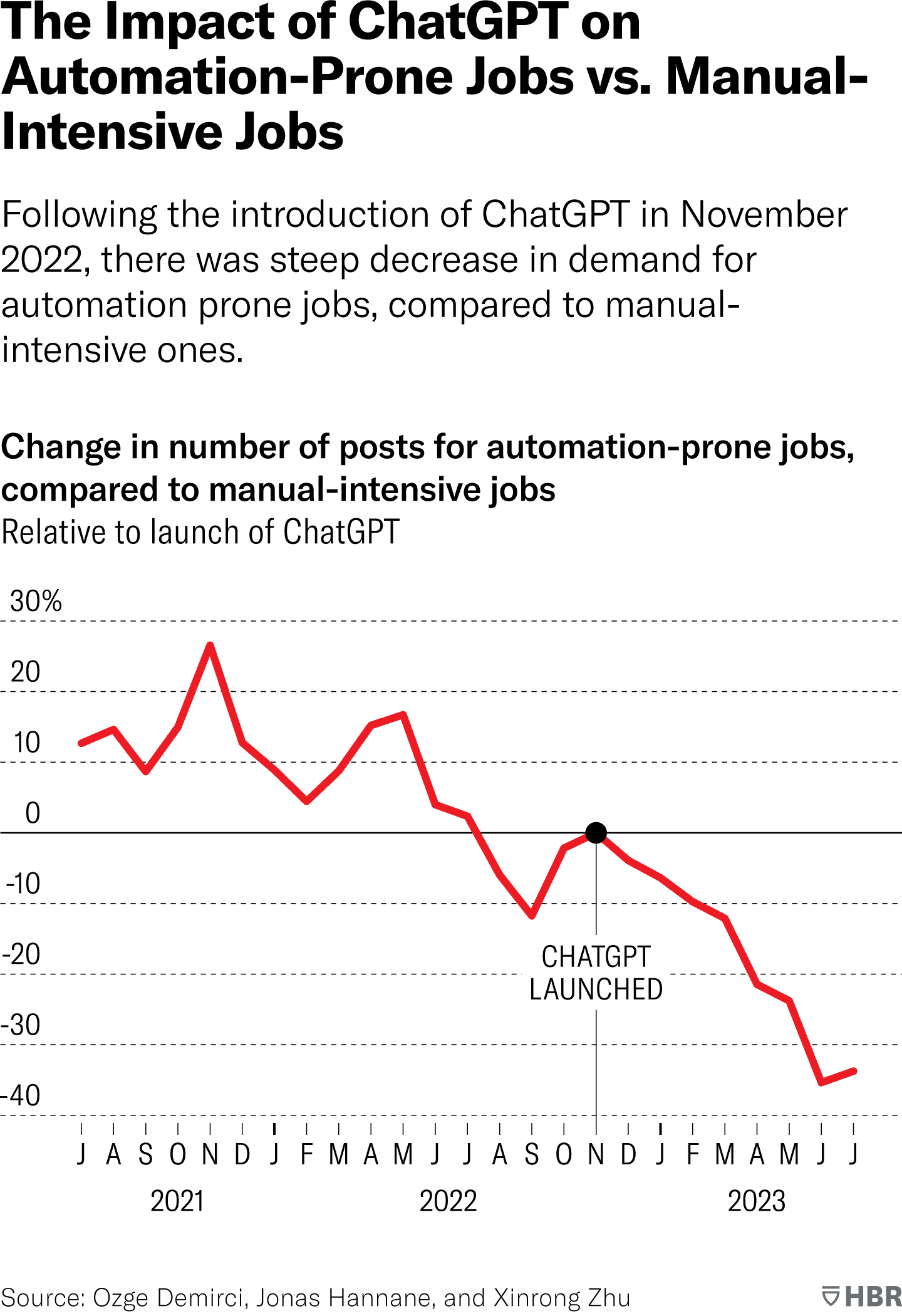

One more note: Harvard Business Review recently published a piece pointing out that AI is already reducing the number of job opportunities in creative industries, stating:

“Within a year of introducing image-generating AI tools, demand for graphic design and 3D modeling freelancers decreased by 17.01%.”

Taking a step back… Based on the existing definition of Ethical AI — did Bruno do what they needed to in 2024?

Yes! They got consent from artists!

But the long-tail impact of what ‘Bruno AI’ is doing with this data may end up replacing large sources of income for the Artist.

So here's how I'd evolve the definition of Ethical AI:

Ethical AI starts with the Training Dataset, ensuring that the catalogue of Art you are training on has the proper permissions and consent from the originating creator of that Art, as well as considering the long-term impact on compensation for the originating creator of that Art.

What’s this mean for you? As a business, or as an Artist?

If you’re a business:

Whether you’re considering partnering with AI companies, or building your own models:

Be thoughtful about who you partner with, and about the questions you ask on how a company trains its model.

Following the principles of Fairly Trained is a great start

Never be afraid to ask the question point-blank - “what is your training dataset?”

Consider building a business model in which, as the AI technology grows, the ‘originating training dataset contributors’ see continued growth too.

This article here does a solid job considering some strategies
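One possible shape for such a business model, sketched in Python with made-up numbers: a fixed share of revenue goes into a contributor pool, which is split in proportion to how many tracks each contributor licensed. The function name, the 20% share, and the contributors are all hypothetical. This is an illustration of the principle, not a description of how any particular company pays artists.

```python
def split_contributor_pool(revenue_cents, pool_share_percent, tracks_by_contributor):
    """Split a fixed share of revenue among contributors, pro rata by track count.

    Amounts are in whole cents; any rounding remainder is left undistributed.
    """
    pool = revenue_cents * pool_share_percent // 100
    total_tracks = sum(tracks_by_contributor.values())
    return {
        name: pool * tracks // total_tracks
        for name, tracks in tracks_by_contributor.items()
    }


# Hypothetical contributors and the number of tracks each one licensed.
contributors = {"artist_a": 30, "artist_b": 10}

# Year one: $1,000 in revenue, 20% goes to the contributor pool.
year_one = split_contributor_pool(100_000, 20, contributors)

# Year two: revenue doubles, so every contributor's payout doubles too.
year_two = split_contributor_pool(200_000, 20, contributors)

print(year_one)
print(year_two)
```

The key design choice is that payouts are tied to revenue rather than paid once up front, so contributors share in the growth their data made possible.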

If you’re an Artist / Musician / Producer / Creative:

There’s a vast potential for creatives to generate passive income through AI:

Lemonaide has already paid tens of thousands of dollars to creatives to use their music ethically as part of our training process, and we are clearly not alone.

There’s a great chance for you to continue to make money using your ‘main’ sources of income (Streaming, Sync Licensing, whatever) and earn passive income by leasing that same catalogue to an AI company as well.

As with any land of opportunity, just be careful.

Get to know the company’s ethical standards on its website, if it has any

See if you can chat with someone at the company. If you email support@lemonaide.ai, you are guaranteed a detailed response from someone on the team, with the founding team’s visibility and input as well

The least AI companies can do these days is offer this - and if they aren’t willing to communicate, you shouldn’t be willing to share your data

Here's an analogy artists will recognize: AI companies can act like a ‘predatory music label’.

There are SO many resources now on not signing a record deal without the proper knowledge and legal advice that it feels like common knowledge.

This same concept applies to working with AI and Music companies.

We need to guard against predatory music labels using superficial "consent" from artists as a way to falsely claim their actions are ethical.

If there’s a recurring revenue deal on the table for you, and it seems fair, take it! But if a company is saying “We want consent to use your data for whatever we want”, that’s when you should be very careful.

Ending on an optimistic note

I can’t speak for others, but I do believe there is a world where AI tools advance in quality in a way that truly helps creators do things they could never do before, while protecting the original copyright owners and attributing proper credit / compensation back to them as AI Music Technology scales.

It’s not an easy optimistic future to create, but I’m grateful I get to work at Lemonaide and give it a shot!

Recommended watch

Wanna learn more about ethical AI in music technology? Check out this YouTube video I made that goes deeper into the subject.